As with all things in life, the only constant is change itself. A couple of years ago, I wrote a blog post about my website and the evolution it has gone through over the years. In my journey as a security engineer, I have learned that it's better to make progress by shipping a minimum viable product and then iterating on it constantly and gradually. That is easier than holding a release back and waiting for it to be perfect. I've read that "perfection is the enemy of progress" (PEOP).

Background

In 2017, I switched from an inexpensive web-hosting platform to a "serverless" architecture that costs me pennies a month to maintain and operate. However, one of the downsides of running such a system was managing the site with pure HTML, JavaScript, and CSS. Implementing a blog, or anything more complex, required many code changes and pushing objects straight to S3.

Core Implementation

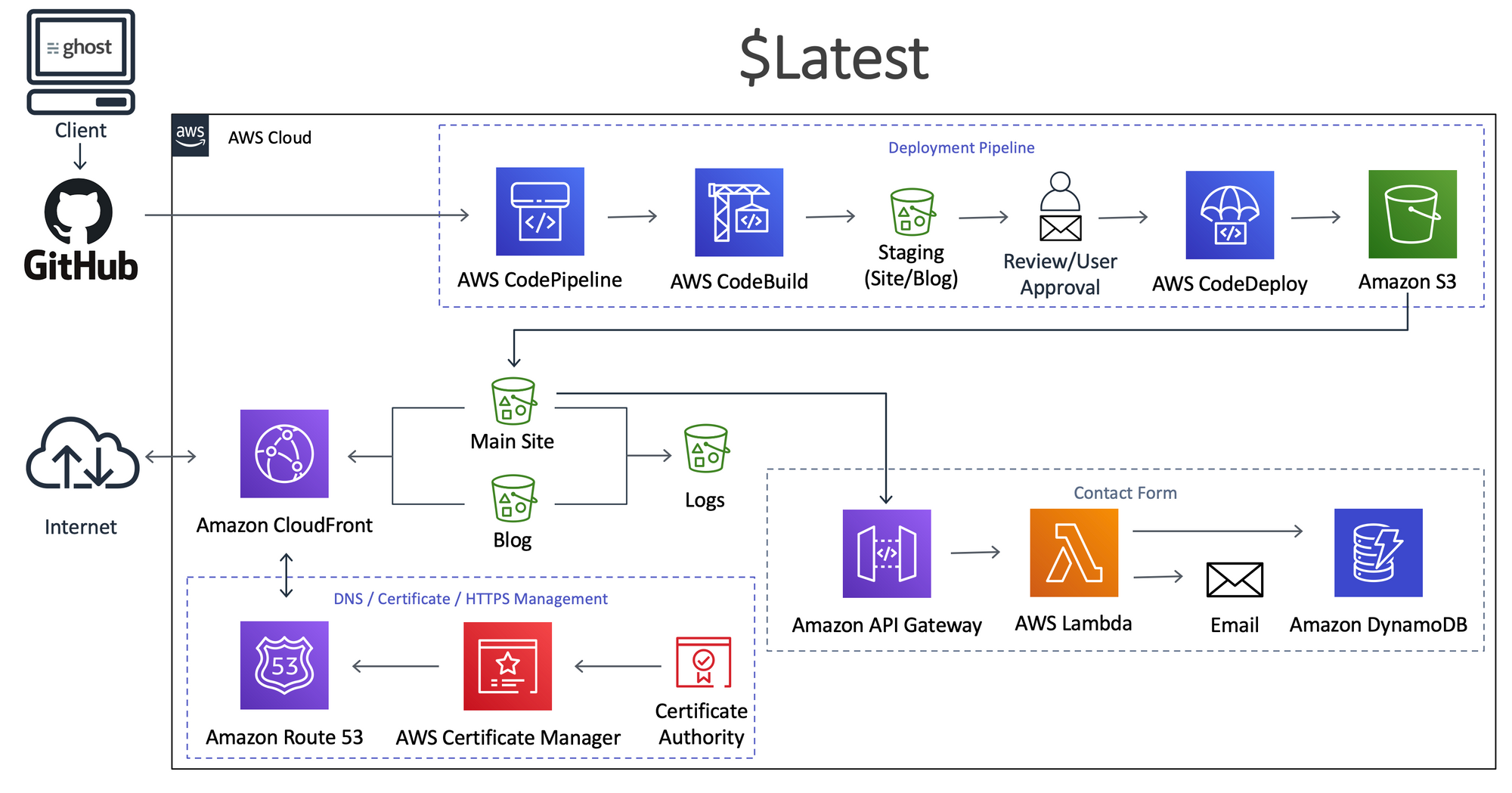

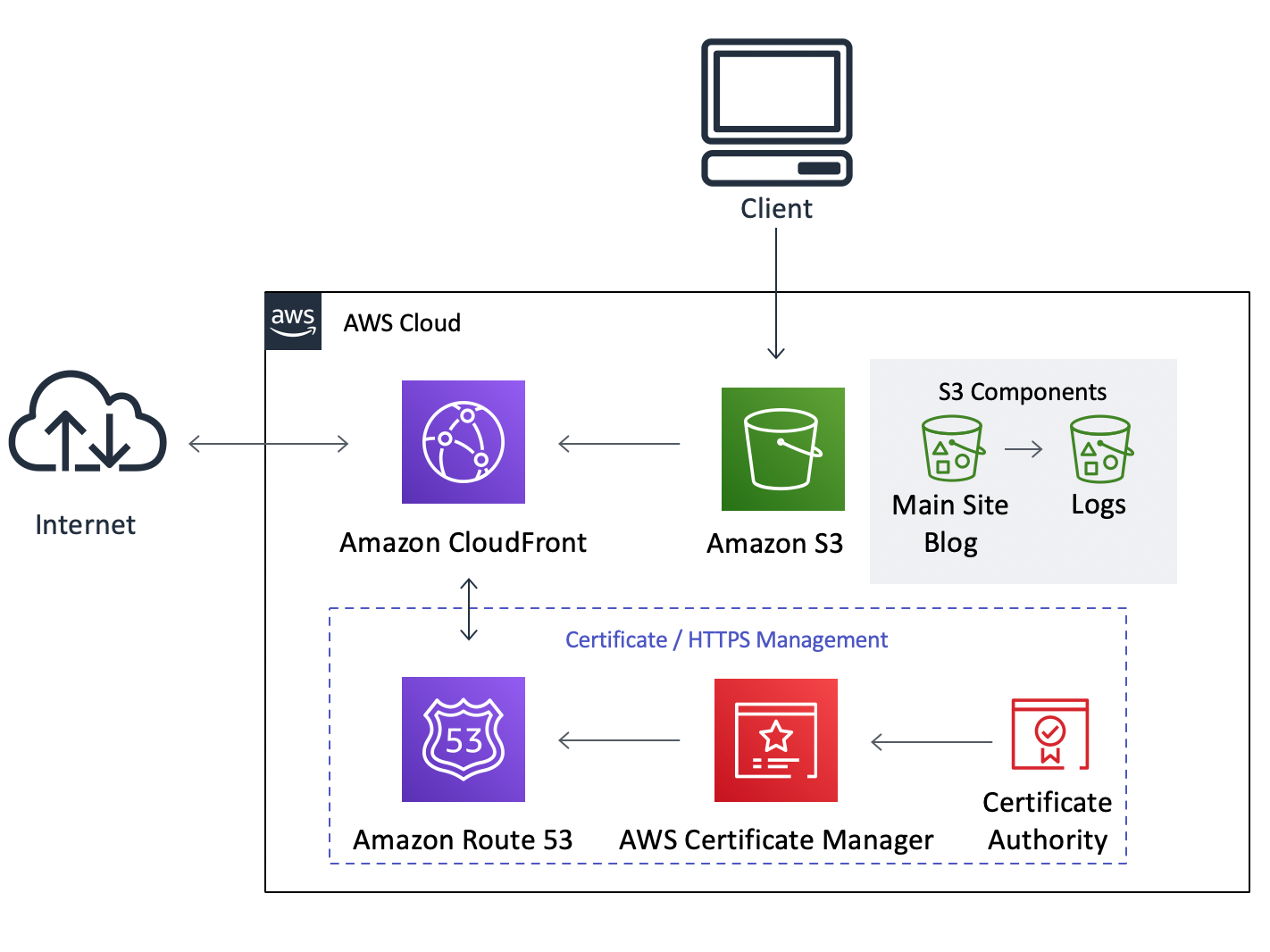

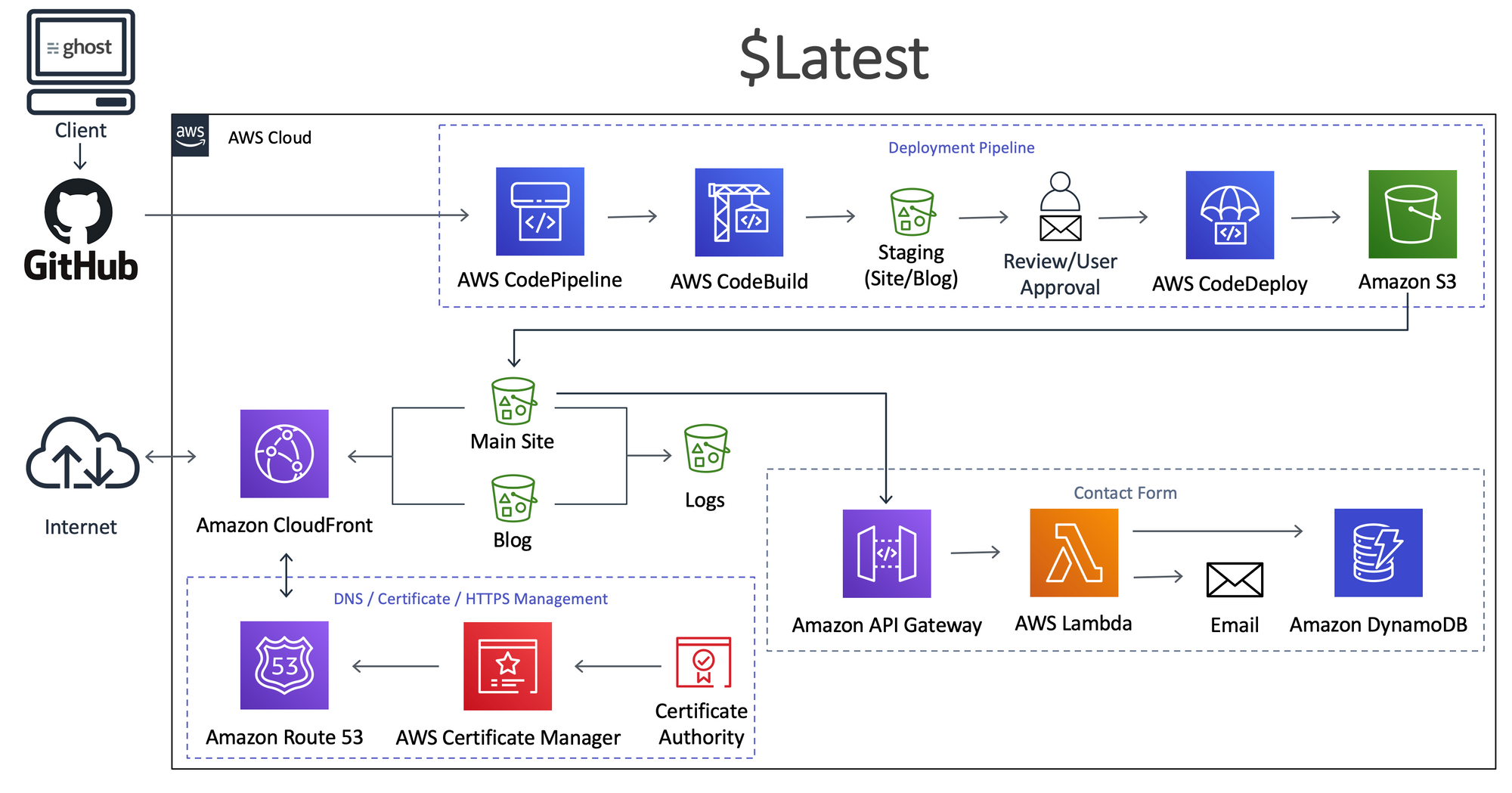

This implementation is the core architecture that powers my main website and my blog. I publish the static assets to a private Amazon S3 bucket (thou shalt not have a public S3 bucket). Amazon CloudFront, a content delivery network (CDN), pulls the assets from the bucket using an origin access identity (OAI) and serves them to the internet. Amazon Route 53 and AWS Certificate Manager provide the DNS and HTTPS for the CDN. Later, I decided to separate the blog from the main site and put them into separate buckets. The main site would be mostly static and wouldn't typically require much change, and I didn't want to manage a full blog in that way.
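To make the private-bucket arrangement concrete, here is a minimal sketch of the kind of bucket policy that lets a single OAI read objects while the bucket itself stays private. The function name, bucket name, and OAI ID are hypothetical placeholders, not values from my setup.

```python
import json


def oai_bucket_policy(bucket_name: str, oai_id: str) -> dict:
    """Build an S3 bucket policy granting read access to one CloudFront OAI."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowCloudFrontOAIRead",
                "Effect": "Allow",
                # Only this CloudFront identity may read; the bucket stays private.
                "Principal": {
                    "AWS": (
                        "arn:aws:iam::cloudfront:user/"
                        f"CloudFront Origin Access Identity {oai_id}"
                    )
                },
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical bucket name and OAI ID, for illustration only.
    policy = oai_bucket_policy("example-blog-bucket", "E2EXAMPLEOAI")
    print(json.dumps(policy, indent=2))
```

The printed JSON can be attached to the bucket as its policy. Nothing else needs read access, so the bucket's public access settings can stay fully blocked.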

After some research and a couple of recommendations, I decided to give Ghost a try. Ghost is a free and open-source blogging platform written in JavaScript and distributed under the MIT License, designed to simplify online publishing for individual bloggers and online publications. The platform, based on Node.js, has handy APIs that you can use to generate an entirely static copy of a blog, which makes it easy to deploy to something like S3. That let me run Ghost locally on my machine, create the static assets, and then push them to S3.

That system worked well, but I wanted more automation. I wanted to streamline the process so that I could get version control, preview changes before they reached the site, and easily roll back anything I wasn't satisfied with. The following is the Continuous Integration/Continuous Delivery (CI/CD) pipeline and workflow that I came up with and use today to get that control.

The Workflow

Post Generation and Settings

It all starts on my local machine, which hosts a local copy of my main site and, in a separate folder, the Ghost instance for my blog. The key here is that I don't need to keep that machine connected to the internet, running, or accessible at any given time; I only use it when I need it! When I have a post ready to go, I publish it in the CMS, then run the static site generator and have it write to a folder to start the review and deployment phase. You can check out other features of Ghost and the static generator I use.

Review, CI/CD, Testing, and Approval

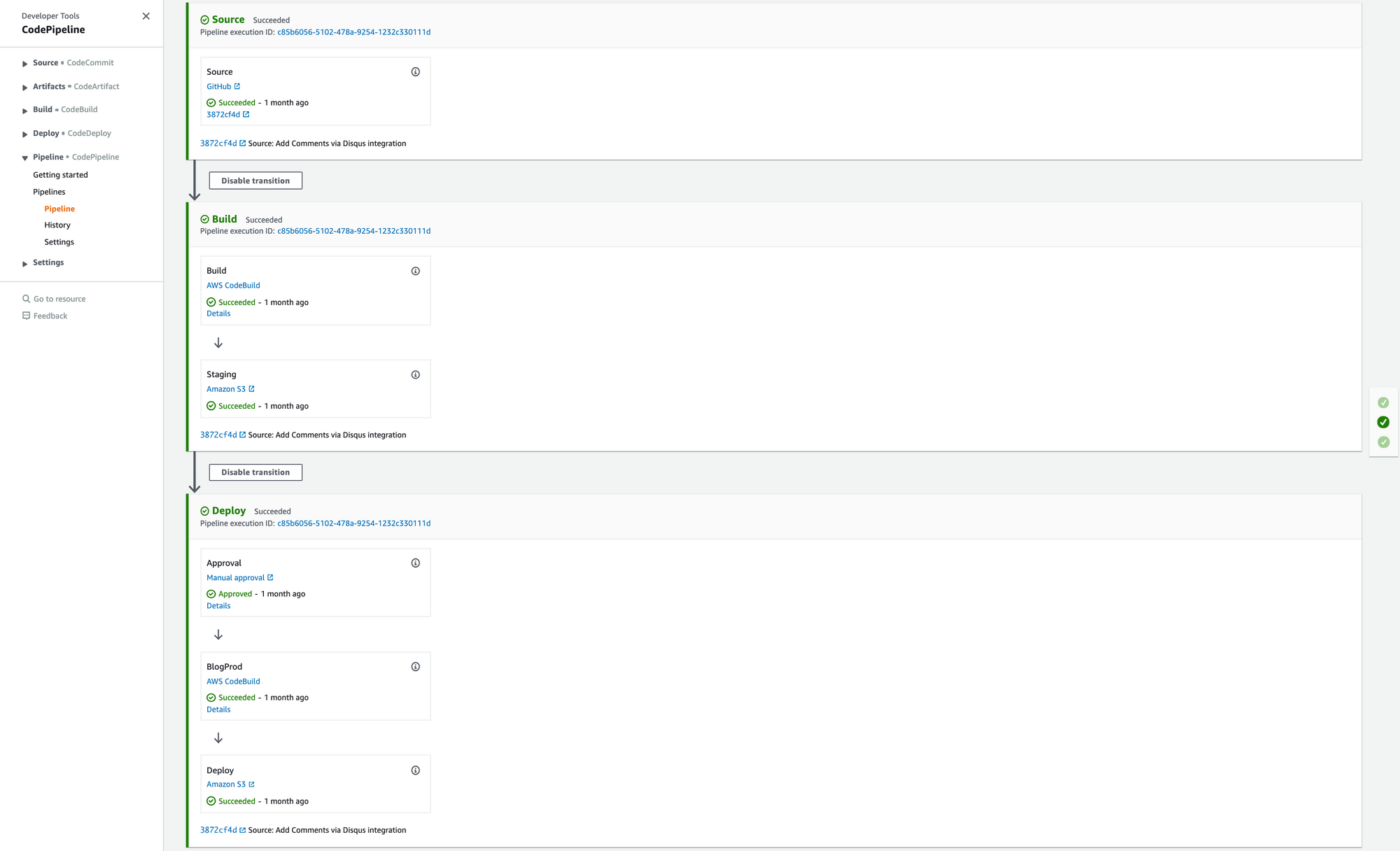

The folder that holds the exported posts and the rest of the blog is a Git repository hosted on GitHub. At this point, I could run a script to automate pushing the pages to GitHub with a timestamp or something similar as the commit message. For now, I do this manually with an alias (refer to PEOP above). Once the changes are committed and pushed, the automation takes over.
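The script I mention but haven't written could look something like the sketch below. It is only an illustration under assumptions: the message format, the function names, and the choice to stage everything with `git add --all` are mine to invent here, not a description of my alias.

```python
import subprocess
from datetime import datetime, timezone
from typing import List, Optional


def commit_message(now: Optional[datetime] = None) -> str:
    """Return a commit message carrying a UTC timestamp."""
    now = (now or datetime.now(timezone.utc)).astimezone(timezone.utc)
    return "Publish blog export " + now.strftime("%Y-%m-%dT%H:%M:%SZ")


def git_commands(message: str) -> List[List[str]]:
    """List the git invocations needed to stage, commit, and push."""
    return [
        ["git", "add", "--all"],
        ["git", "commit", "-m", message],
        ["git", "push"],
    ]


def publish(repo_dir: str) -> None:
    """Run the commands in repo_dir, stopping at the first failure.

    Note: `git commit` exits non-zero when there is nothing to commit,
    so check=True raises CalledProcessError in that case.
    """
    for cmd in git_commands(commit_message()):
        subprocess.run(cmd, cwd=repo_dir, check=True)


if __name__ == "__main__":
    # Print the plan instead of running it; call publish(path) to execute.
    for cmd in git_commands(commit_message()):
        print(" ".join(cmd))
```

Keeping the command list separate from the code that runs it makes the plan easy to inspect before anything touches the repository.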

I use AWS CodePipeline to facilitate the CI/CD. Within CodePipeline, you can set up webhooks that fire when code lands in an AWS CodeCommit or GitHub repository. For my use case, CodePipeline invokes AWS CodeBuild to create a staging bucket and temporarily push the blog there, so I can view the changes, see how the site would look, and ensure everything works as it is supposed to. It then sends me an email to approve or deny the deployment, along with a link to view the website.
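As a rough sketch of the staging step, the build could run AWS CLI commands along these lines. The bucket naming scheme, the function names, and the use of `--delete` are assumptions for illustration; my actual build configuration is not shown here.

```python
from typing import List


def staging_bucket_name(site: str, commit_sha: str) -> str:
    """Derive a per-commit staging bucket name (hypothetical naming scheme).

    S3 bucket names must be lowercase, so the result is lowercased.
    """
    return f"{site}-staging-{commit_sha[:7]}".lower()


def staging_commands(bucket: str, export_dir: str) -> List[List[str]]:
    """AWS CLI calls that create the staging bucket and upload the export."""
    return [
        # Make the temporary bucket.
        ["aws", "s3", "mb", f"s3://{bucket}"],
        # Mirror the exported site into it; --delete removes stale objects.
        ["aws", "s3", "sync", export_dir, f"s3://{bucket}", "--delete"],
    ]


if __name__ == "__main__":
    # Hypothetical site name, commit hash, and export folder.
    bucket = staging_bucket_name("blog", "3f9c2ab7d1e0")
    for cmd in staging_commands(bucket, "./static"):
        print(" ".join(cmd))
```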

Publishing

If I am OK with the changes and approve the deployment, the pipeline tears down the staging bucket and deploys to the production bucket, ready for the next CloudFront sync or request. If I am not OK with them, I deny the deployment, make the changes, and the process starts again from the beginning. The workflow is the same for the main site, except that it is pushed to a different GitHub repository and published to a different bucket.
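The approve-or-deny decision can be sketched as a small function that returns the AWS CLI commands to run next. This is an illustration, not my pipeline definition: the function name and bucket names are hypothetical, and returning no commands on denial is an assumption, since all I described above is that a denial keeps the change out of production.

```python
from typing import List


def resolve_approval(
    approved: bool,
    staging_bucket: str,
    production_bucket: str,
    export_dir: str,
) -> List[List[str]]:
    """Return the AWS CLI commands to run once the approval email is answered."""
    if not approved:
        # Denied: nothing is deployed; fix the post and push again.
        return []
    return [
        # Tear down the staging bucket, including its contents.
        ["aws", "s3", "rb", f"s3://{staging_bucket}", "--force"],
        # Publish the export to production.
        ["aws", "s3", "sync", export_dir, f"s3://{production_bucket}", "--delete"],
    ]


if __name__ == "__main__":
    # Hypothetical bucket names and export folder.
    plan = resolve_approval(True, "blog-staging-3f9c2ab", "blog-production", "./static")
    for cmd in plan:
        print(" ".join(cmd))
```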

Costs (As of July 2020)

Fixed Costs (Yearly)

- Domain (Route 53): $12

- DNS (Route 53): $6

Variable Costs (Monthly)

- Amazon S3: $0.023 per GB for the first 50 TB, then cheaper from there.

- Amazon CloudFront: $0.085 per GB for the first 10 TB of data transfer out to the internet, then less expensive from there.

- AWS CodePipeline/Build: $1 if you use it once. $0 if you don't for the month.

- GitHub: $0

A lot of these services are also free tier eligible, so most of them won't incur any costs until after the first 12 months. My variable expenses end up being about $1.02 per month. The fixed costs of $18 per year work out to $1.50 per month, which brings my total to about $2.52 per month.
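The monthly total can be checked with a short calculation. The figures come from the lists above; the variable and function names are only for illustration.

```python
from decimal import Decimal

# Yearly fixed costs in USD, from the list above.
FIXED_YEARLY = {"domain": Decimal("12"), "dns": Decimal("6")}
# Approximate monthly variable costs in USD.
VARIABLE_MONTHLY = Decimal("1.02")


def monthly_total(fixed_yearly: dict, variable_monthly: Decimal) -> Decimal:
    """Spread the yearly fixed costs over 12 months and add the variable costs."""
    fixed_monthly = sum(fixed_yearly.values(), Decimal("0")) / 12
    return (fixed_monthly + variable_monthly).quantize(Decimal("0.01"))


if __name__ == "__main__":
    print(monthly_total(FIXED_YEARLY, VARIABLE_MONTHLY))  # prints 2.52
```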

Conclusion

The negligible cost and the ease of implementation demonstrate why so many organizations and workflows make their way to the "cloud." It has made my life easier. I will continue to iterate on this architecture, make things better, and track future metrics.

Don't let perfection be the enemy of progress!